Optimizing Performance: Key Techniques for Scalable Systems

In today’s digital landscape, it’s not just about building functional systems; it’s about creating systems that scale smoothly and efficiently under demanding loads. But as many developers and architects can attest, scalability often comes with its own unique set of challenges. A seemingly minute inefficiency, when multiplied a million times over, can cause systems to grind to a halt. So, how can you ensure your applications stay fast and responsive, regardless of the demand?

In this newsletter, we’ll delve deep into the world of performance optimization for scalable systems. We’ll explore common strategies that you can weave into any codebase, be it front end or back end, regardless of the language you’re working with. So whether you’re building the next big social network, an enterprise-grade software suite, or just looking to optimize your personal projects, the strategies we’ll discuss here will be invaluable assets in your toolkit. Let’s dive in.

Prefetching

Prefetching is a performance optimization technique built on anticipation. Imagine a user interacting with an application. While the user performs one action, the system can anticipate the next one and fetch the required data in advance. The data is then available almost instantly when it’s needed, which makes the application feel much faster and more responsive. Fetching data before it’s needed can significantly improve the user experience, but when overdone it wastes bandwidth, memory, and processing power on data that is never used. Facebook, for example, relies heavily on prefetching, especially for ML-intensive features such as “Friends suggestions.”

When Should You Prefetch?

Prefetching means sending requests to the server before the user explicitly asks for the data. That sounds promising, but every prefetched response that goes unused is pure overhead, so the expected benefit has to outweigh the cost of the wasted requests.

A. Optimizing Server Time (Backend Code Optimizations)

Before jumping into prefetching, it’s wise to ensure that the server response time is optimized. Optimal server time can be achieved through various backend code optimizations, including:

- Streamlining database queries to minimize retrieval times.

- Running independent, expensive operations concurrently rather than one after another.

- Reducing redundant API calls that fetch the same data repeatedly.

- Stripping away any unnecessary computations that might be slowing down the server response.

B. Confirming User Intent

The essence of prefetching is predicting the user’s next move. However, predictions can sometimes be wrong. If the system fetches data for a page or feature the user never accesses, it results in resource wastage. Developers should employ mechanisms to gauge user intent, such as tracking user behavior patterns or checking active engagements, ensuring that data isn’t fetched without a reasonably high probability of being used.
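As a rough illustration of gating prefetches on user intent, here is a minimal Python sketch. The `Prefetcher` class, the `hint` method, the probability values, and the 0.7 threshold are all hypothetical; in a real system the probability would come from whatever behavior tracking you already have.

```python
from concurrent.futures import ThreadPoolExecutor


class Prefetcher:
    """Starts a fetch early only when the predicted chance of use is high enough."""

    def __init__(self, fetch, threshold=0.7, max_workers=2):
        self._fetch = fetch          # hypothetical loader: key -> data
        self._threshold = threshold  # assumed cut-off; tune per application
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._pending = {}           # key -> Future for fetches already started

    def hint(self, key, probability):
        """Record an intent signal; prefetch only if it is likely to pay off."""
        if probability >= self._threshold and key not in self._pending:
            self._pending[key] = self._pool.submit(self._fetch, key)

    def get(self, key):
        """Return prefetched data if a fetch was started, else fetch on demand."""
        future = self._pending.pop(key, None)
        if future is not None:
            return future.result()  # usually finished by the time it is needed
        return self._fetch(key)
```

A hover over a link might translate into `prefetcher.hint("profile", 0.9)`, while a link that is merely visible on screen might score too low to trigger any request at all.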

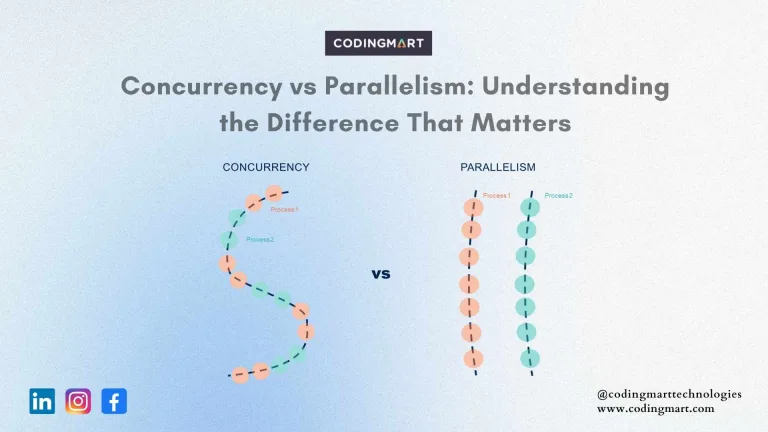

Concurrent Fetching

Concurrent fetching is the practice of fetching multiple sets of data simultaneously rather than one at a time. It’s similar to having several clerks working at a grocery store checkout instead of just one: customers get served faster, queues clear more quickly, and overall efficiency improves. In the context of data, since many datasets don’t rely on each other, fetching them concurrently can greatly accelerate page load times, especially when dealing with intricate data that requires more time to retrieve.

When Should You Use Concurrent Fetching?

When the datasets are independent and expensive to fetch: If the datasets have no dependencies on one another and each takes significant time to retrieve, fetching them concurrently reduces the total wait to roughly the duration of the slowest fetch instead of the sum of all of them.
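Here is a small Python sketch of that idea using `asyncio.gather`. The three `fetch_*` functions and their return values are made up for illustration, and `asyncio.sleep` stands in for a slow network or database call.

```python
import asyncio
import time


async def fetch_profile(user_id):
    await asyncio.sleep(0.2)  # stands in for a slow network or database call
    return {"id": user_id, "name": "Ada"}


async def fetch_orders(user_id):
    await asyncio.sleep(0.2)
    return [{"order": 1}, {"order": 2}]


async def fetch_recommendations(user_id):
    await asyncio.sleep(0.2)
    return ["book", "lamp"]


async def load_dashboard(user_id):
    # The three datasets do not depend on each other, so they are awaited together.
    profile, orders, recs = await asyncio.gather(
        fetch_profile(user_id),
        fetch_orders(user_id),
        fetch_recommendations(user_id),
    )
    return {"profile": profile, "orders": orders, "recommendations": recs}


if __name__ == "__main__":
    start = time.perf_counter()
    asyncio.run(load_dashboard(7))
    print(f"loaded in {time.perf_counter() - start:.2f}s")
```

Run one after another, the three calls would take about 0.6 seconds; awaited together, the total should be close to the 0.2 seconds of a single call.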

Use it mostly in the back end and sparingly in the front end: Concurrent fetching can work wonders in the back end by improving server response times, but it must be used judiciously in the front end. Flooding the client with simultaneous requests can hurt the user experience instead of helping it.
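One common way to stay on the safe side is to cap how many requests are in flight at once. The sketch below does this in Python with `asyncio.Semaphore`; the `fetch_all` helper, the `fetch_one` callback, and the default limit of 4 are assumptions chosen for illustration, not recommended values.

```python
import asyncio


async def fetch_all(keys, fetch_one, limit=4):
    """Fetch every key concurrently, but never more than `limit` at a time."""
    semaphore = asyncio.Semaphore(limit)

    async def bounded(key):
        # Each task waits here until one of the `limit` slots is free.
        async with semaphore:
            return await fetch_one(key)

    # gather returns results in the same order as `keys`.
    return await asyncio.gather(*(bounded(key) for key in keys))
```

The same pattern applies in a browser: rather than firing every request the moment a page loads, queue them and let only a handful run at any moment.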

Prioritizing network calls: If data fetching involves several network calls, it’s wise to prioritize one major call and handle it in the foreground, concurrently processing the others in the background. This ensures that the most crucial data is retrieved first while secondary datasets load simultaneously.
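A minimal sketch of that foreground/background split in Python might look like the following. The `load_page` function and the `on_primary_ready` callback are hypothetical names; the callback represents whatever your application does as soon as the most important data arrives, such as rendering the main view. Note that this only controls what the code waits on first, not how the network shares bandwidth between the calls.

```python
import asyncio


async def load_page(fetch_primary, secondary_fetchers, on_primary_ready):
    # Kick off the secondary calls as background tasks; they start running
    # as soon as this coroutine awaits something.
    background = [asyncio.create_task(fetch()) for fetch in secondary_fetchers]

    # Wait for the most important data in the foreground and use it right away.
    primary = await fetch_primary()
    on_primary_ready(primary)

    # Collect the secondary results, which have been loading in the meantime.
    extras = await asyncio.gather(*background)
    return primary, list(extras)
```

On an article page, for instance, the article body could be the primary call while comments and related links arrive in the background.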

Memoization

In the realm of computer science, “Don’t repeat yourself” isn’t just a good coding practice; the same spirit underlies one of the most effective performance optimization techniques: memoization. Memoization capitalizes on the idea that re-computing certain operations can be a drain on resources, especially if the results of those operations don’t change frequently. So why redo what’s already been done?

Memoization optimizes applications by caching computation results. When a particular computation is needed again, the system checks if the result exists in the cache. If it does, the result is directly retrieved from the cache, skipping the actual computation. In essence, memoization involves creating a memory (hence the name) of past results. This is especially useful for functions that are computationally expensive and are called multiple times with the same inputs. It’s akin to a student solving a tough math problem and jotting down the answer in the margin of their book. If the same question appears on a future test, the student can simply reference the margin note rather than work through the problem all over again.
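To make the mechanics concrete, here is a hand-rolled Python sketch of a memoizing decorator. The `memoize` decorator, the `shipping_cost` function, and its pricing formula are invented for illustration, and the sketch assumes all arguments are hashable.

```python
import functools

calls = {"count": 0}  # tracks how often the real computation runs


def memoize(func):
    """Cache results keyed by the positional arguments (which must be hashable)."""
    cache = {}

    @functools.wraps(func)
    def wrapper(*args):
        if args not in cache:
            cache[args] = func(*args)  # compute once, remember the answer
        return cache[args]

    return wrapper


@memoize
def shipping_cost(weight_kg, distance_km):
    calls["count"] += 1  # only incremented on a cache miss
    return round(weight_kg * 0.5 + distance_km * 0.01, 2)


shipping_cost(2, 100)  # computed
shipping_cost(2, 100)  # served from the cache; the function body is skipped
```

In everyday Python code you would more likely reach for the standard library’s `functools.lru_cache`, which adds a size limit so the cache cannot grow without bound.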

When Should You Memoize?

Memoization isn’t a one-size-fits-all solution. In certain scenarios, memoizing might consume more memory than it’s worth. So, it’s crucial to recognize when to use this technique:

- When the data doesn’t change very often: Functions that return consistent results for the same inputs, especially if these functions are compute-intensive, are prime candidates for memoization. This ensures that the effort taken to compute the result isn’t wasted on subsequent identical calls.

- When the data is not too sensitive: Security and privacy concerns are paramount. While it might be tempting to cache everything, it’s not always safe. Data like payment information, passwords, and other personal details should never be cached. More benign data, like the number of likes and comments on a social media post, can safely be memoized to improve performance.
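For data that changes occasionally, such as a like count, one option is to let cached entries expire after a while. The Python sketch below is one way to do that; the `TTLCache` class, its `get_or_compute` method, and the 30-second lifetime in the usage example are assumptions for illustration only.

```python
import time


class TTLCache:
    """Memoizes values for a limited time so stale results eventually refresh."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock   # injectable so expiry can be tested without waiting
        self._entries = {}    # key -> (expires_at, value)

    def get_or_compute(self, key, compute):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]   # still fresh: skip the computation
        value = compute()     # missing or expired: recompute and store
        self._entries[key] = (now + self._ttl, value)
        return value
```

A call such as `cache.get_or_compute("post:1:likes", count_likes)` with `TTLCache(ttl_seconds=30)` would hit the underlying store at most once every 30 seconds per post, where `count_likes` is whatever function performs the real lookup.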

Lazy Loading

Lazy loading is a design pattern in which the loading of data or resources is deferred until they’re explicitly needed. Instead of pre-loading everything up front, you load only what’s essential for the initial view and then fetch additional resources as and when they’re needed. Think of it as a restaurant that prepares a dish only when a guest orders it, rather than a buffet that keeps everything out all the time. A practical example is a modal on a web page: the data inside the modal isn’t necessary until a user decides to open it by clicking a button. By applying lazy loading, we can hold off on fetching that data until the very moment it’s required.
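The modal example can be sketched in a few lines of Python. The `LazyValue` class and the `load_modal_data` function are hypothetical; the loader stands in for the request that would fetch the modal’s contents.

```python
class LazyValue:
    """Defers an expensive load until the value is first requested."""

    def __init__(self, loader):
        self._loader = loader
        self._loaded = False
        self._value = None

    def get(self):
        if not self._loaded:
            self._value = self._loader()  # runs only on the first access
            self._loaded = True
        return self._value


def load_modal_data():
    # Stands in for a network request made when the modal is opened.
    return {"title": "Details"}


modal_data = LazyValue(load_modal_data)  # nothing is fetched yet
# Later, in the click handler that opens the modal:
# contents = modal_data.get()
```

If the user never opens the modal, the loader never runs and the request is never made.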

Conclusion

In today’s fast-paced digital world, every millisecond counts. Users demand rapid responses, and businesses can’t afford to keep them waiting. Performance optimization is no longer just a ‘nice-to-have’ but an absolute necessity for anyone serious about delivering a top-tier digital experience.

With techniques such as prefetching, concurrent fetching, memoization, and lazy loading, developers have a robust arsenal at their disposal to fine-tune their applications. These strategies differ in where and how they apply, but they share a common goal: to make applications run as efficiently and swiftly as possible.

However, it’s important to remember that no single strategy fits all scenarios. Each application is unique, and performance optimization requires a judicious blend of understanding the application’s needs, recognizing the users’ expectations, and applying the right techniques effectively. It’s an ongoing journey of refinement and learning.